The XGBoost and LightGBM modeling engines both enable distributing the computations needed to train a single boosted tree model across several CPU cores. Similarly, the tidymodels framework enables distributing model fits across cores. The natural question, then, is whether tidymodels users ought to make use of the engine’s parallelism implementation, tidymodels’ implementation, or both at the same time. This blog post is a scrappy attempt at finding which of those approaches will lead to the smallest elapsed time when fitting many models.

The problem

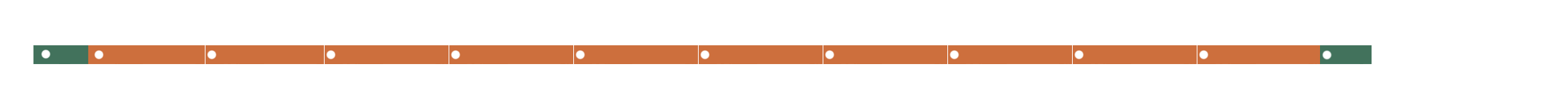

For example, imagine the case where we evaluate a single model against 10 resamples. Doing so sequentially might look something like this, where each dotted orange segment indicates a model fit:

The x-axis here depicts time. The short green segments on either side of the orange segments indicate the portions of the elapsed time allotted to tidymodels “overhead,” like checking arguments and combining results. Because the fits are sequential, each one starts only after the previous one finishes.

If you’re feeling lost already, a previous blog post of mine on how we think about optimizing our code in tidymodels might be helpful.

1) Use the engine’s parallelism implementation only.

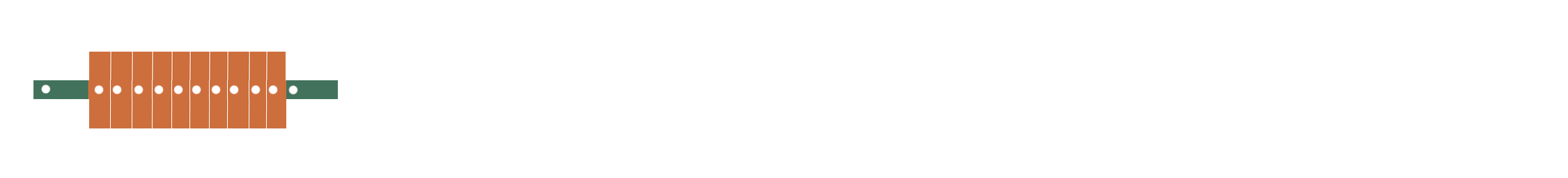

The XGBoost and LightGBM engines implement their own parallelism frameworks such that a single model fit can be distributed across many cores. If we distribute a single model fit’s computations across 5 cores, we could see, best-case, a 5-fold speedup in the time to fit each model. The model fits still happen in order, but each individual (hopefully) happens much quicker, resulting in a shorter overall time:

The taller segments indicate that each model fit’s computations are distributed across multiple CPU cores. (I don’t know. There’s probably a better way to depict that.)

2) Use tidymodels’ parallelism implementation only.

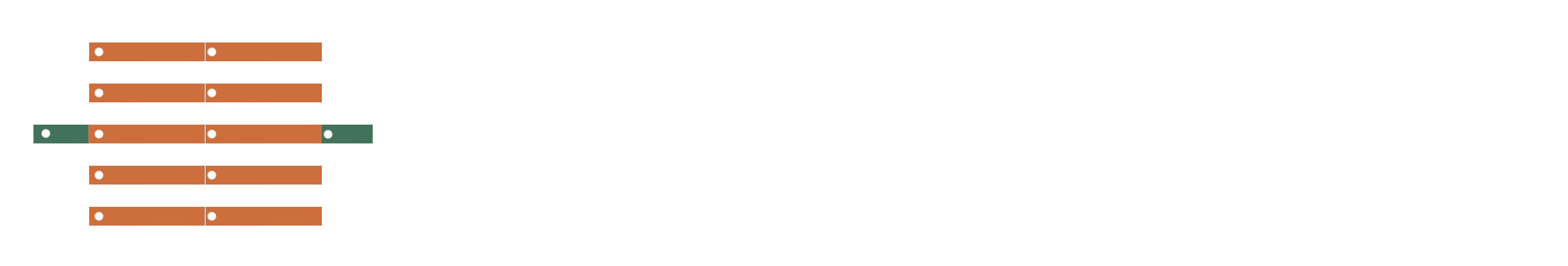

The tidymodels framework supports distributing model fits across CPU cores in the sense that, when fitting n models across m CPU cores, tidymodels can allot each core to fit n/m of the models. In the case of 10 models across 5 cores, then, each core takes care of fitting two:

Note that a given model fit happens on a single core, so the time to fit a single model stays the same.
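To make the two diagrams concrete, here’s a back-of-the-envelope sketch of the best-case elapsed time under each approach. It assumes every fit takes the same time t on one core, that the engine’s parallelism scales perfectly, and that there’s no overhead at all; ideal_elapsed() is a hypothetical helper, not part of tidymodels.

```r
# Best-case elapsed time for n equally sized model fits on m cores.
# Idealized sketch: assumes each fit takes `t` seconds on one core,
# perfect scaling of the engine's parallelism, and zero overhead.
ideal_elapsed <- function(n, m, t) {
  c(
    sequential      = n * t,               # one fit after another, one core each
    engine_only     = n * t / m,           # fits still in order, each m times faster
    tidymodels_only = ceiling(n / m) * t   # fits spread across cores, each full length
  )
}

ideal_elapsed(n = 10, m = 5, t = 1)
```

With 10 fits on 5 cores, both parallel approaches tie at 2 time units in this idealized world, so any difference we see in practice comes from how well each implementation actually scales and how much overhead it adds.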

3) Use both the engine’s and tidymodels’ parallelism implementation.

Why can’t we do both 1) and 2)? If both parallelism approaches play nicely with each other, and neither of them is able to perfectly distribute its computations across all of the available resources on its own, then we could get some of the benefits from both approaches and squeeze the maximal computational performance out of our available resources:

In reality, parallelism frameworks come with their fair share of overhead, and often don’t play nicely with each other. It’d be nice to know whether, in practice, any of these three approaches stands out among the others as the most performant way to resample XGBoost and LightGBM models with tidymodels. We’ll simulate some data and run some quick benchmarks to get some intuition about how to best parallelize parameter tuning with tidymodels.

This post is based on a similar idea to an Applied Predictive Modeling blog post from Max Kuhn in 2018, but it:

- is less refined (Max tries out many different dataset sizes on three different operating systems, while I fix both of those variables here),

- uses modern implementations, incl. tidymodels instead of caret, future instead of foreach, and updated XGBoost (and LightGBM) package versions, and

- happens to be situated in a modeling context more similar to one that I’m currently benchmarking for another project.

I’m running this experiment on an M1 Pro MacBook Pro with 32GB of RAM and 10 cores, running macOS Sonoma 14.4.1. We’ll create a 10,000-row dataset, hold out a quarter of it as a test set, and partition the remaining 7,500 rows into 10 folds for cross-validation. Tuning over 10 candidate parameter combinations then results in 100 model fits, each on 6,750 rows, per call to tune_grid().
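The per-fit row counts follow from rsample’s defaults. The arithmetic below spells them out, assuming initial_split()’s default prop = 3/4 and vfold_cv()’s default v = 10:

```r
# Back-of-the-envelope counts for this experiment.
# Assumes initial_split()'s default prop = 3/4 and vfold_cv()'s default v = 10.
n_rows     <- 10000
n_train    <- n_rows * 3 / 4          # rows left after holding out a test set
v          <- 10                      # cross-validation folds
n_analysis <- n_train * (v - 1) / v   # rows each model is fitted on
n_assess   <- n_train / v             # rows each model is evaluated on
n_fits     <- v * 10                  # folds x candidate parameter combinations

c(n_train = n_train, n_analysis = n_analysis, n_assess = n_assess, n_fits = n_fits)
```

The 6,750/750 split matches the fold sizes that vfold_cv() prints for this data.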

Setup

Starting off by loading needed packages and simulating some data using the sim_classification() function from modeldata:
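The code for this step isn’t shown here, so what follows is a minimal sketch of what it might look like. It assumes the bonsai package is installed (it supplies the "lightgbm" engine for boost_tree()) and that future’s plan() is what configures tidymodels’ parallelism. The seed is also an assumption, so the simulated values won’t necessarily match the printout below.

```r
# Attaches tune, parsnip, rsample, dials, modeldata, and friends
library(tidymodels)
# Provides the "lightgbm" engine for parsnip::boost_tree()
library(bonsai)
# Provides plan() for configuring tidymodels' parallel backend
library(future)

# The seed is an assumption; the original value isn't shown
set.seed(1)
# 10,000 rows: a two-level `class` outcome plus 15 numeric predictors
dat <- sim_classification(num_samples = 10000)
```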

We’d like to predict class using the rest of the variables in the dataset:

```r
dat
```

```
# A tibble: 10,000 × 16
   class   two_factor_1 two_factor_2 non_linear_1 non_linear_2 non_linear_3
   <fct>          <dbl>        <dbl>        <dbl>        <dbl>        <dbl>
 1 class_2       -0.329       -1.28         0.186       0.578         0.732
 2 class_2        0.861       -0.389        0.106       0.701         0.647
 3 class_2       -0.461       -1.69         0.193       0.337         0.814
 4 class_2        2.75         1.35        -0.215       0.119         0.104
 5 class_2        0.719        0.127       -0.479       0.878         0.334
 6 class_2       -0.743       -1.36         0.480       0.0517        0.400
 7 class_2        0.805        0.447       -0.947       0.342         0.382
 8 class_2        0.669        1.23         0.682       0.461         0.924
 9 class_2        0.887        0.593        0.701       0.772         0.297
10 class_1       -1.14         0.353       -0.729       0.819         0.331
# ℹ 9,990 more rows
# ℹ 10 more variables: linear_01 <dbl>, linear_02 <dbl>, linear_03 <dbl>,
#   linear_04 <dbl>, linear_05 <dbl>, linear_06 <dbl>, linear_07 <dbl>,
#   linear_08 <dbl>, linear_09 <dbl>, linear_10 <dbl>
```

```r
form <- class ~ .
```

Splitting the data into training and testing sets before making a 10-fold cross-validation object:

```r
set.seed(1)

dat_split <- initial_split(dat)
dat_train <- training(dat_split)
dat_test <- testing(dat_split)

dat_folds <- vfold_cv(dat_train)

dat_folds
```

```
#  10-fold cross-validation 
# A tibble: 10 × 2
   splits             id    
   <list>             <chr> 
 1 <split [6750/750]> Fold01
 2 <split [6750/750]> Fold02
 3 <split [6750/750]> Fold03
 4 <split [6750/750]> Fold04
 5 <split [6750/750]> Fold05
 6 <split [6750/750]> Fold06
 7 <split [6750/750]> Fold07
 8 <split [6750/750]> Fold08
 9 <split [6750/750]> Fold09
10 <split [6750/750]> Fold10
```

For both XGBoost and LightGBM, we’ll only tune the learning rate and number of trees.

```r
spec_bt <-
  boost_tree(learn_rate = tune(), trees = tune()) %>%
  set_mode("classification")
```

The trees parameter greatly affects the time to fit a boosted tree model. Just to be super sure that analogous fits are happening in each of the following tune_grid() calls, we’ll create the grid of possible parameter values beforehand and pass it to each tune_grid() call.

```r
set.seed(1)

grid_bt <-
  spec_bt %>%
  extract_parameter_set_dials() %>%
  grid_latin_hypercube(size = 10)

grid_bt
```

```
# A tibble: 10 × 2
   trees learn_rate
   <int>      <dbl>
 1  1175    0.173  
 2   684    0.0817 
 3   558    0.00456
 4  1555    0.0392 
 5   861    0.00172
 6  1767    0.00842
 7  1354    0.0237 
 8    42    0.0146 
 9   241    0.00268
10  1998    0.222  
```

For both LightGBM and XGBoost, we’ll test each of those three approaches. I’ll write out the explicit code I use to time each of these computations; note that the only thing that changes in each of those chunks is the parallelism setup code and the arguments to set_engine().
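Each timing keeps only the "elapsed" entry of system.time(), i.e. wall-clock time, which is what we experience while waiting on tune_grid(). The "user" and "system" entries measure CPU time charged to the calling R process, and with multisession workers much of the fitting happens in separate processes, so those entries could understate the work done. A quick base-R illustration of the difference, using Sys.sleep(), which takes wall-clock time while using almost no CPU:

```r
# Sys.sleep() waits without computing, so wall-clock ("elapsed") time
# grows while CPU time for this process ("user.self") stays near zero.
sleep_timing <- system.time(Sys.sleep(0.5))
sleep_timing[["elapsed"]]    # roughly 0.5 seconds
sleep_timing[["user.self"]]  # close to 0
```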

Write a function that takes in a parallelism setup and engine and returns a timing like those below!😉 Make sure to “tear down” the parallelism setup after.
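One possible answer to that exercise (spoiler!), written as a sketch: time_tune_grid() is a hypothetical helper, not a tidymodels function. It assumes tune 1.2.0 or later (whose parallelism is configured through future), that bonsai is loaded when engine = "lightgbm", and it restores whatever future plan was active before the call via on.exit().

```r
# Hypothetical helper: time one tune_grid() call under a given parallelism
# setup, then tear that setup down again. Assumes tune (>= 1.2.0), parsnip,
# and future are installed, plus bonsai for the "lightgbm" engine.
time_tune_grid <- function(spec, engine, threads, workers,
                           formula, resamples, grid) {
  # The two engines name their thread-count argument differently.
  thread_arg <- switch(
    engine,
    xgboost  = "nthread",
    lightgbm = "num_threads",
    stop("Unsupported engine: ", engine)
  )

  # Set up tidymodels' parallelism; plan() returns the previous plan...
  old_plan <- if (workers > 1) {
    future::plan(future::multisession, workers = workers)
  } else {
    future::plan(future::sequential)
  }
  # ...which we restore on exit ("tear down"), even if tuning errors.
  on.exit(future::plan(old_plan), add = TRUE)

  # Equivalent to spec %>% set_engine(engine, nthread = threads), etc.
  engine_args <- stats::setNames(list(threads), thread_arg)
  spec <- do.call(parsnip::set_engine, c(list(spec, engine), engine_args))

  # Keep only wall-clock time, as in the chunks below.
  system.time(
    tune::tune_grid(spec, formula, resamples, grid = grid)
  )[["elapsed"]]
}
```

For example, approach 2) for XGBoost would be time_tune_grid(spec_bt, "xgboost", threads = 1, workers = 10, form, dat_folds, grid_bt). Validating the engine before touching the plan means an unsupported engine fails without changing any global state.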

For a summary of those timings, see “Putting it all together” below.

XGBoost

First, testing 1) engine implementation only, we use plan(sequential) to tell tidymodels’ parallelism framework not to kick in, and set nthread = 10 in set_engine() to tell XGBoost to distribute its computations across 10 cores:

```r
plan(sequential)

timing_xgb_1 <- system.time({
  res <-
    tune_grid(
      spec_bt %>% set_engine("xgboost", nthread = 10),
      form,
      dat_folds,
      grid = grid_bt
    )
})[["elapsed"]]
```

Now, for 2) tidymodels implementation only, we use plan(multisession, workers = 10) to tell tidymodels to distribute its computations across cores and set nthread = 1 to disable XGBoost’s parallelization:

```r
plan(multisession, workers = 10)

timing_xgb_2 <- system.time({
  res <-
    tune_grid(
      spec_bt %>% set_engine("xgboost", nthread = 1),
      form,
      dat_folds,
      grid = grid_bt
    )
})[["elapsed"]]
```

Finally, for 3) both parallelism implementations, we enable parallelism for both frameworks:

```r
plan(multisession, workers = 10)

timing_xgb_3 <- system.time({
  res <-
    tune_grid(
      spec_bt %>% set_engine("xgboost", nthread = 10),
      form,
      dat_folds,
      grid = grid_bt
    )
})[["elapsed"]]
```

We’ll now do the same thing for LightGBM.

LightGBM

First, testing 1) engine implementation only:

```r
plan(sequential)

timing_lgb_1 <- system.time({
  res <-
    tune_grid(
      spec_bt %>% set_engine("lightgbm", num_threads = 10),
      form,
      dat_folds,
      grid = grid_bt
    )
})[["elapsed"]]
```

Now, 2) tidymodels implementation only:

```r
plan(multisession, workers = 10)

timing_lgb_2 <- system.time({
  res <-
    tune_grid(
      spec_bt %>% set_engine("lightgbm", num_threads = 1),
      form,
      dat_folds,
      grid = grid_bt
    )
})[["elapsed"]]
```

Finally, 3) both parallelism implementations:

```r
plan(multisession, workers = 10)

timing_lgb_3 <- system.time({
  res <-
    tune_grid(
      spec_bt %>% set_engine("lightgbm", num_threads = 10),
      form,
      dat_folds,
      grid = grid_bt
    )
})[["elapsed"]]
```

Putting it all together

At a glance, those timings are (in seconds):

```
# A tibble: 3 × 3
  approach        xgboost lightgbm
  <chr>             <dbl>    <dbl>
1 engine only      988.46   103.13
2 tidymodels only  133.23   23.328
3 both             132.59   23.279
```

At least in this context, we see:

- Using only the engine’s parallelization results in a substantial slowdown for both engines, relative to either approach that uses tidymodels’ parallelization.

- For both XGBoost and LightGBM, using tidymodels’ parallelization alone and combining it with the engine’s parallelization take about the same time. (This is nice to see in the sense that users don’t need to adjust their tidymodels parallelism configuration just to fit this particular kind of model; if they have a parallelism configuration set up already, it won’t hurt to keep it around.)

- LightGBM models train quite a bit faster than XGBoost models, though we can’t meaningfully compare those fit times without knowing whether performance metrics are comparable.
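To put rough numbers on those comparisons, the base-R sketch below re-enters the timings from the table above (hard-coded, since the code that assembled the table isn’t shown) and expresses each approach’s elapsed time relative to the tidymodels-only approach:

```r
# Timings in seconds, copied from the table above.
timings <- data.frame(
  approach = c("engine only", "tidymodels only", "both"),
  xgboost  = c(988.46, 133.23, 132.59),
  lightgbm = c(103.13, 23.328, 23.279)
)

# Elapsed time relative to "tidymodels only": above 1 is slower.
baseline <- timings$approach == "tidymodels only"
relative <- data.frame(
  approach = timings$approach,
  xgboost  = round(timings$xgboost / timings$xgboost[baseline], 2),
  lightgbm = round(timings$lightgbm / timings$lightgbm[baseline], 2)
)
relative
```

By this measure, the engine-only approach is roughly 7.4 times slower for XGBoost and 4.4 times slower for LightGBM, while combining both implementations rounds to 1.00 for each engine.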

These are similar conclusions to what Max observes in the linked APM blog post. A few considerations that, in this context, may have made tidymodels’ parallelization seem extra advantageous:

- We’re resampling across 10 folds here and, conveniently, distributing those computations across 10 cores. That is, each core is (likely) responsible for the fits on just one fold, and there are no cores “sitting idle” unless one core finishes its fits well before another.

- We’re resampling models rather than just fitting one model. If we had fitted just one model, tidymodels wouldn’t offer any support for distributing its computations across cores, but that is exactly the situation XGBoost’s and LightGBM’s own parallelism is built for.

- When using tidymodels’ parallelism implementation, it’s not just the model fits that are distributed across cores: preprocessing, prediction, and metric calculation are distributed too. (Framed in the context of the diagrams above, there are little green segments dispersed throughout the orange ones that can be parallelized.)
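The first consideration is easy to check with some arithmetic. Per control_grid()’s documentation, its default parallel_over = NULL parallelizes over resamples when there’s more than one, so each fold is a unit of work. The hypothetical helper below counts how many rounds of work the workers go through and how many worker slots sit idle in the last round, assuming every fold takes the same time and a simple round-by-round schedule:

```r
# Hypothetical helper: distribute `tasks` equally sized units of work
# (here, one per fold) across `workers`, reporting the number of rounds
# and the idle worker slots in the final round. Assumes equal task times.
rounds_and_idle <- function(tasks, workers) {
  rounds <- ceiling(tasks / workers)
  c(rounds = rounds, idle_in_last_round = rounds * workers - tasks)
}

rounds_and_idle(tasks = 10, workers = 10)  # one round, no idle workers
rounds_and_idle(tasks = 10, workers = 8)   # two rounds, 6 workers idle in the second
```

With 8 workers instead of 10, the second round would run only two folds while six workers wait, which is one way the 10-folds-on-10-cores setup here flatters tidymodels’ parallelism.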

Session Info

```r
sessioninfo::session_info(
  c(tidymodels_packages(), "xgboost", "lightgbm"),
  dependencies = FALSE
)
```

```
─ Session info ───────────────────────────────────────────────────────────────
 setting  value
 version  R version 4.3.3 (2024-02-29)
 os       macOS Sonoma 14.4.1
 system   aarch64, darwin20
 ui       X11
 language (EN)
 collate  en_US.UTF-8
 ctype    en_US.UTF-8
 tz       America/Chicago
 date     2024-05-13
 pandoc   3.1.12.3 @ /opt/homebrew/bin/ (via rmarkdown)

─ Packages ───────────────────────────────────────────────────────────────────
 package      * version     date (UTC) lib source
 broom        * 1.0.5.9000  2024-05-09 [1] Github (tidymodels/broom@b984deb)
 cli            3.6.2       2023-12-11 [1] CRAN (R 4.3.1)
 conflicted     1.2.0       2023-02-01 [1] CRAN (R 4.3.0)
 dials        * 1.2.1       2024-02-22 [1] CRAN (R 4.3.1)
 dplyr        * 1.1.4       2023-11-17 [1] CRAN (R 4.3.1)
 ggplot2      * 3.5.1       2024-04-23 [1] CRAN (R 4.3.1)
 hardhat        1.3.1.9000  2024-04-26 [1] Github (tidymodels/hardhat@ae8fba7)
 infer        * 1.0.6.9000  2024-03-25 [1] local
 lightgbm       4.3.0       2024-01-18 [1] CRAN (R 4.3.1)
 modeldata    * 1.3.0       2024-01-21 [1] CRAN (R 4.3.1)
 parsnip      * 1.2.1.9001  2024-05-09 [1] Github (tidymodels/parsnip@320affd)
 purrr        * 1.0.2       2023-08-10 [1] CRAN (R 4.3.0)
 recipes      * 1.0.10.9000 2024-04-08 [1] Github (tidymodels/recipes@63ced27)
 rlang          1.1.3       2024-01-10 [1] CRAN (R 4.3.1)
 rsample      * 1.2.1       2024-03-25 [1] CRAN (R 4.3.1)
 rstudioapi     0.16.0      2024-03-24 [1] CRAN (R 4.3.1)
 tibble       * 3.2.1       2023-03-20 [1] CRAN (R 4.3.0)
 tidymodels   * 1.2.0       2024-03-25 [1] CRAN (R 4.3.1)
 tidyr        * 1.3.1       2024-01-24 [1] CRAN (R 4.3.1)
 tune         * 1.2.1       2024-04-18 [1] CRAN (R 4.3.1)
 workflows    * 1.1.4.9000  2024-05-01 [1] local
 workflowsets * 1.1.0       2024-03-21 [1] CRAN (R 4.3.1)
 xgboost        1.7.7.1     2024-01-25 [1] CRAN (R 4.3.1)
 yardstick    * 1.3.1       2024-03-21 [1] CRAN (R 4.3.1)

 [1] /Users/simoncouch/Library/R/arm64/4.3/library
 [2] /Library/Frameworks/R.framework/Versions/4.3-arm64/Resources/library

──────────────────────────────────────────────────────────────────────────────
```